I think anyone who’s built or worked on something tends to wonder whether it could have been done better, or what they might have done differently.

Gabe Newell of Valve has said that his favorite game from his company was Portal 2, if only because he had been much more involved in the development of every other game Valve has created. He said that while playing the company’s other games, he would notice areas where content got cut or things didn’t go perfectly according to plan.

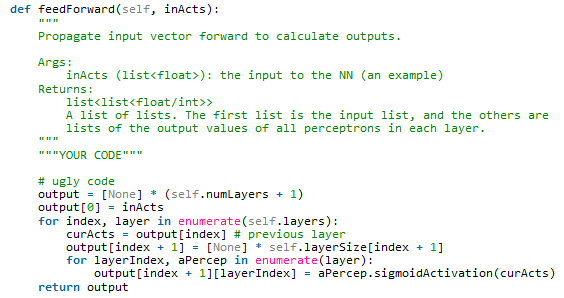

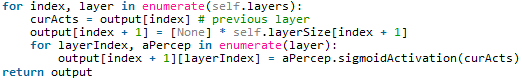

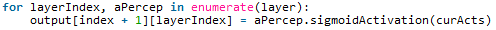

If you’ve built a decent number of things, you may reach a point of reflection where there’s that one thing you built that works, but that you’re unhappy with. For me, it’s this piece of code:

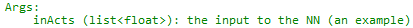

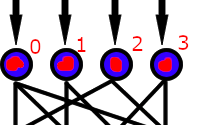

This was for a project in my intro to AI class where we were writing an implementation of a feed-forward neural network (whoa!). It sounds really complicated, but basically all it involved was stringing together a few nodes (perceptrons, or, like, simple neurons kind of like the ones in your brain) like in the picture below, and then doing some fancy math to describe how values move through the network.

So, this function described how the values would propagate from one layer to the next (for instance, from the blue layer to the green, or from the green layer to the orange).
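For anyone who wants the “fancy math” spelled out, this is the standard way a sigmoid feed-forward layer is usually written. It’s a general formulation, not necessarily the exact one from our textbook; bias terms are left out here for simplicity, and the assignment may well have included one. Here $a^{(0)}$ is the list of input values and $w^{(\ell+1)}_{ji}$ is the weight that perceptron $j$ in layer $\ell+1$ puts on input $i$:

$$
\sigma(z) = \frac{1}{1 + e^{-z}}, \qquad
a^{(\ell+1)}_j = \sigma\!\left(\sum_i w^{(\ell+1)}_{ji}\, a^{(\ell)}_i\right)
$$

Applying that once per layer, feeding each layer’s $a^{(\ell)}$ into the next, is what “propagating from one layer to the next” means.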

How did I know this code was bad?

I had a close friend who was returning from a sports tournament and was trying to complete the assignment the day it was due. We had our textbooks open to all the formulas we needed, and I was practicing some form of “clean room coding,” where I would help him understand the concepts and algorithms without either of us seeing the other’s code. Everything was going perfectly until we reached …

(it haunts me)

Also,

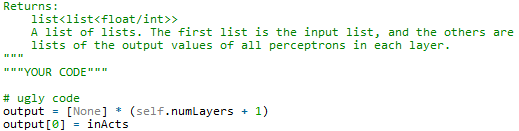

```python
"""YOUR CODE"""
# ugly code
```

So, in a moment of pure horror and frustration, we broke the “clean room” so we could both look over this piece of code. We spent maybe 15 to 20 minutes trying to reimplement it, but none of our attempts worked. We couldn’t figure out how this code worked, let alone replicate what it did.

If this (or something like it) was actually running as live code somewhere and causing errors, I’m sure someone would be pulling their hair out.

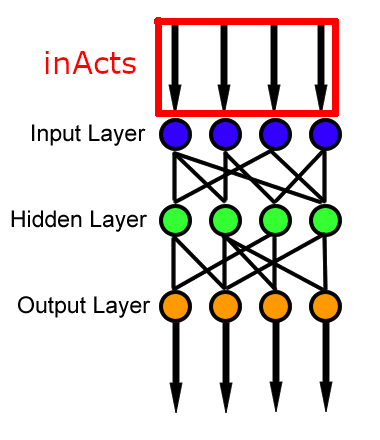

What can one do in a situation like this? Well, you can start from the input and the output of the function and try to figure out how it works:

Cool, so uh, what? This means we’re given a list of decimal values (like 3.14159…) that represents the values of our input layer, like so:

We also see:

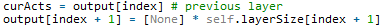

Cool! So now we know that our function outputs a 2-dimensional array (essentially, an array containing arrays) where the number of inner arrays is the number of layers plus 1 (n+1). We also know that inActs is the same as the 0th (computers count from zero) array of our output. So, we’ll make a guess:
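That guess can be written down as a few checks. This is a hypothetical sketch: the helper name check_feed_forward_shape, the num_layers parameter, and the example values are all made up for illustration and aren’t from the assignment.

```python
def check_feed_forward_shape(output, in_acts, num_layers):
    """Hypothetical helper: encode our guess about feedForward's return value."""
    # One list for the input layer, plus one list per layer of perceptrons (n + 1).
    assert len(output) == num_layers + 1
    # The 0th list is just the input activations that were passed in.
    assert output[0] == in_acts
    # Every entry is itself a list, so the whole thing is 2D (a list of lists).
    assert all(isinstance(acts, list) for acts in output)
    return True


# Made-up values for a 3-layer network with 4 perceptrons per layer.
in_acts = [0.5, 3.14159, -1.0]
example_output = [
    in_acts,
    [0.2, 0.7, 0.9, 0.4],
    [0.6, 0.3, 0.8, 0.5],
    [0.1, 0.9, 0.4, 0.7],
]
print(check_feed_forward_shape(example_output, in_acts, num_layers=3))  # True
```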

This looks pretty good! Let’s assume this is true for now and see if we can figure out the rest:

Ooh, a scary nested for loop. For the top one, we should think of a real example for it to run on; that way we can think about how it will start and when it will stop. So, if we have 3 layers like in our picture, it will count: 0, 1, 2.

So, if we pretend (in our heads) to run it on 0, 1, and 2, we can see it will start at Output[0], then go to Output[1], and finally Output[2]. We can also see that at each step, it “looks ahead” and builds the next output array: at 0 it builds Output[1], at 1 it builds Output[2], and at 2 it builds Output[3].
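To make that mental run-through concrete, here’s a tiny sketch that only records which array each step reads from and which one it builds. num_layers = 3 matches the three-layer example, and the steps list exists purely for illustration; it isn’t part of the original code.

```python
num_layers = 3  # three layers, as in the example picture

steps = []
for i in range(num_layers):  # counts 0, 1, 2
    # Step i reads Output[i] and "looks ahead" to build Output[i + 1].
    steps.append((i, i + 1))

for reads, builds in steps:
    print(f"reads Output[{reads}], builds Output[{builds}]")
```

Running it prints one line per step, ending with the step that reads Output[2] and builds Output[3].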

Whew.

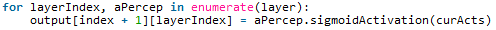

So now, let’s look at the nested for loop, or the loop that occurs each time the top loop occurs:

Wow. This is a doozy. First off, we know that the sigmoid activation function is the secret sauce that those crazy Canadians figured out long ago to make neural nets go, so let’s just say that it lets a perceptron take a bunch of inputs, weigh each one, do some math, and then spit out a single value that gets sent to all the perceptrons in the next layer. Those values are probably what we’re building in our 2D output array.
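Here’s a minimal sketch of what a single perceptron’s sigmoid activation usually computes: multiply each input by a weight, add everything up, and squash the sum into the range (0, 1). The function names and the lack of a bias term are assumptions for illustration; the assignment’s actual perceptron class may differ (for example, by including a bias weight).

```python
import math


def sigmoid(z):
    """Squash any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def perceptron_output(weights, inputs):
    """Hypothetical single perceptron: weighted sum, then sigmoid (no bias term)."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return sigmoid(total)


print(sigmoid(0.0))                                  # 0.5
print(perceptron_output([0.5, -0.25], [2.0, 4.0]))  # weighted sum is 0.0, so 0.5
print(perceptron_output([1.0, 1.0], [2.0, 4.0]))    # large sum, so close to 1
```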

We also see the for loop (for layerIndex, aPercep in enumerate(layer)), which means it iterates through a layer, getting a layerIndex that starts at zero and a perceptron object nicknamed “aPercep” for your reading pleasure. It probably looks like this:
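As for what enumerate itself hands back on each pass, here’s a small sketch using a made-up layer of four perceptrons represented by placeholder strings. Notice that the number paired with each perceptron is its position within the layer, so the name layerIndex is a little misleading.

```python
layer = ["percep_0", "percep_1", "percep_2", "percep_3"]  # placeholder perceptrons

pairs = []
for layerIndex, aPercep in enumerate(layer):
    # layerIndex counts 0, 1, 2, 3: the perceptron's slot in this layer.
    pairs.append((layerIndex, aPercep))
    print(layerIndex, aPercep)
```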

So if we look at that code block again…

We can see that it’s building layer index + 1 by taking in the inputs (curActs, the activations from the previous layer), shoving them through the perceptron à la the secret meat grinder that is the sigmoid activation function, and storing the result as that perceptron’s output value, for every perceptron (0, 1, 2, 3 in our example) in the layer. That output value is then used as an input for the perceptrons in the next layer.

Now, if we did a run-through in our heads, we’d see that these two lines build the activation values for the next layer. Then the inner loop ends, the top for loop increments, and we use the values we just generated to build the next layer, repeating until there are no layers left.
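Putting the two loops together, here’s a self-contained, index-based sketch in the spirit of that walkthrough. It is not the original code (that only survives as a screenshot); representing each perceptron as a plain list of weights, and the name feed_forward_indexed, are assumptions made so the example runs on its own.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def feed_forward_indexed(layers, inActs):
    """Hypothetical index-based forward pass.

    layers[i][j] is the weight list of perceptron j in layer i.
    Returns one activation list per layer, plus the inputs at index 0.
    """
    output = [inActs]
    for i in range(len(layers)):  # outer loop: one pass per layer
        curActs = output[i]       # activations of the previous layer
        output.append([None] * len(layers[i]))  # empty slot for Output[i + 1]
        for j, weights in enumerate(layers[i]):  # inner loop: each perceptron
            total = sum(w * a for w, a in zip(weights, curActs))
            output[i + 1][j] = sigmoid(total)
    return output


# Two inputs, a hidden layer of two perceptrons, and one output perceptron.
layers = [
    [[0.0, 0.0], [1.0, -1.0]],
    [[2.0, 2.0]],
]
result = feed_forward_indexed(layers, [3.0, 3.0])
print(result)
```

Both hidden perceptrons see a weighted sum of 0, so they output 0.5, and the last perceptron outputs sigmoid(2.0). All the index bookkeeping (output[i], output[i + 1][j]) is exactly the part that makes this style hard to read later.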

Cool!

So, if this code works, what’s the problem?

Well, I had written the code only a day or two earlier and couldn’t figure out how it worked in 20 minutes. When you’re “in the zone” writing code like this, it can seem to make perfect sense at the time but look horrible when you come back to it later. I think this may be why some people have the idea that “everyone else’s code is ugly”: maybe, well, everyone’s code is ugly.

Some people subscribe to the idea of “self-documenting code,” the idea that code should be written intuitively (with descriptive variable names, etc.) so that others can read along and get an inkling of what it does. I’m not generally such an optimist, so I like to add the occasional comment for when I feel dumb and am looking over it later.

In a later post, we may look at how this code could be written differently so it’s a bit easier to read and understand.

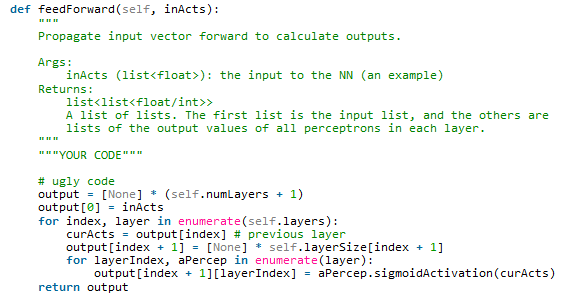

Edit: Reddit commenter ceiclpl wrote a great alternative implementation of this function:

I really wanted to clean this up, and discovered it translates well into understandable code:

```python
def feedForward(self, acts):
    outputs = [acts]
    for layer in self.layers:
        acts = [aPercep.sigmoidActivation(acts) for aPercep in layer]
        outputs.append(acts)
    return outputs
```

Which much better describes what it’s doing. It just iteratively applies the activation function on a list, over and over, and retains the intermediate values.
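To see the comprehension-based version run end to end, here’s a minimal harness around it. The Perceptron and NeuralNet classes are hypothetical stand-ins (the assignment’s real classes aren’t shown, and the sigmoid here has no bias term); only the body of feedForward comes from ceiclpl’s comment.

```python
import math


class Perceptron:
    """Hypothetical perceptron: a weighted sum passed through a sigmoid."""

    def __init__(self, weights):
        self.weights = weights

    def sigmoidActivation(self, inActs):
        total = sum(w * x for w, x in zip(self.weights, inActs))
        return 1.0 / (1.0 + math.exp(-total))


class NeuralNet:
    """Hypothetical container holding a list of layers of perceptrons."""

    def __init__(self, layers):
        self.layers = layers

    def feedForward(self, acts):
        outputs = [acts]  # keep the inputs as Output[0]
        for layer in self.layers:
            # Every perceptron in this layer sees the previous layer's activations.
            acts = [aPercep.sigmoidActivation(acts) for aPercep in layer]
            outputs.append(acts)  # retain the intermediate values
        return outputs


net = NeuralNet([
    [Perceptron([0.0, 0.0]), Perceptron([1.0, -1.0])],
    [Perceptron([2.0, 2.0])],
])
print(net.feedForward([3.0, 3.0]))
```

No indices to track at all: the loop variable acts is simply replaced by the next layer’s activations on each pass.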

I think this code example is also a great demonstration of the “programming as a means of expression” concept.

I’m used to living in “Java/C-land,” where objects are first-class citizens, so instead of making use of Python’s syntactic sugar for working with lists, I did it the old-fashioned way with array indexing.

Thank goodness for the Pythonistas out there; they’re a different breed.
