I’ve previously written about how easy Docker makes it to deploy standard “software in boxes” on a simple cloud server, sparing much of the configuration and setup hassle that running software used to require.

You may be left wondering: what if, instead of small services, I’m trying to set up a full-scale application with many users? To answer this question, we’ll zoom out, “think BIG”, and see what answers we can find.

In this article, we’ll examine the “paradigm shift” that containerized software and cloud computing have brought to the software industry. We’ll look at two technical teams that, with minimal resources, built large, scalable applications by leveraging the deployability of containers and open source software, the scalability enabled by Kubernetes, and the simplicity offered by cloud providers.

In addition, we’ll look at where the industry is headed with different types of cloud deployments, open source vs. proprietary tools, and how to avoid vendor lock-in.

But first: a brief history of global shipping

In their official presentation slides, the Docker team likens containerizing software to containerizing cargo in global shipping. I’ve described before how nice it is that software containers are standard and easy to run, but today we’ll look at another important aspect of the container analogy: scalability.

WWII saw a level of industrialization, manufacturing, mobilization, and global shipping that had never been seen before. Towards the end of the war, the U.S. Army began experimenting with shipping containers that could be packed once and shipped on any mode of transportation. During the Korean War a few years later, the Army standardized on the CONNEX box system and was able to almost halve its end-to-end shipping time (later, during the Vietnam War, it would scale up to more than 200,000 CONNEX boxes by 1967).

Commercial and business use:

Seeing an opportunity in the proven U.S. Army system, American businessman Malcolm McLean secured a $22 million loan in 1956 (almost $207 million in today’s dollars) to buy and convert two large WWII oil tankers into container ships.

In 1956, most cargo was loaded and unloaded by hand by longshoremen, at a cost of $5.86 a ton. Using containers, it cost only 16 cents a ton to load a ship: a roughly 36-fold saving.
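The 36-fold figure is easy to check. Here is a small Python sketch using the two per-ton rates quoted above; the 10,000-ton cargo is a hypothetical size chosen only to show what the difference means in dollars.

```python
# Per-ton loading costs in 1956, as quoted in the text (US dollars).
HAND_LOADING_PER_TON = 5.86
CONTAINER_LOADING_PER_TON = 0.16


def loading_cost(tons: float, cost_per_ton: float) -> float:
    """Total cost to load `tons` of cargo at a given per-ton rate."""
    return tons * cost_per_ton


ratio = HAND_LOADING_PER_TON / CONTAINER_LOADING_PER_TON
print(f"Containers were about {ratio:.1f}x cheaper per ton")  # about 36.6x

# Hypothetical 10,000-ton cargo (illustrative only, not a historical figure).
cargo_tons = 10_000
by_hand = loading_cost(cargo_tons, HAND_LOADING_PER_TON)
by_container = loading_cost(cargo_tons, CONTAINER_LOADING_PER_TON)
print(f"By hand: ${by_hand:,.2f}; by container: ${by_container:,.2f}")
# By hand: $58,600.00; by container: $1,600.00
```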

Within three years of the first container ship’s voyage, McLean’s business was profitable and expanding. By submitting his container design as a standard to the International Organization for Standardization (ISO), McLean proved and popularized a new method of global shipping, built on standard, easy-to-handle shipping containers, that is still in use today.

And now, software:

Relevancy?

Just as McLean’s containers removed the need for longshoremen’s labor-intensive “loading and unloading” of varied cargo across multiple modes of shipping, Docker containers are attractive because they promise that software will run the same way in a laptop development environment as in a (potentially massively scaled) production deployment. The software container only has to be built, or “packed”, once.

Commoditization

I’ve written before about how the economics of software mean that open source back-end infrastructure can be considered a complement to front-end software products, and how smart companies commoditize their products’ complements:

McLean likely wanted to submit his container design to the International Organization for Standardization (ISO) because he had developed a product (container shipping infrastructure) and wanted to make his product’s complement (the container standard) a “commodity”, so that he could load or unload his ships at any port. For similar reasons, the Docker team donated their standard container runtime, containerd, as a free resource to an emerging organization known as the Cloud Native Computing Foundation (CNCF).

Actors in the “software space”:

This also explains why companies such as Google and Facebook release and contribute to so many free and open source software (FOSS) projects. Their goal is to make back-end infrastructure a commodity; in other words, to make it cheap and easy for them to develop user-facing products backed by an ecosystem of free and open source software.

For such reasons, the Cloud Native Computing Foundation is emerging as a governing body that aims to make standardized cloud computing a commodity, as multiple actors in the software space contribute to and rely on extraordinarily powerful free and open source software and cloud deployments.

This stands in contrast to the existing arrangement, in which certain cloud vendors’ proprietary products have become somewhat standard in the industry thanks to first-mover advantage.

Member companies such as Red Hat have been banking on the idea that cloud computing patterns will become ubiquitous, cheaper, and open source.

Case Studies of “Cloud Native Computing”:

Introduction:

In military science, there’s a concept commonly referred to as “force multiplication”. Every battle needs troops on the ground in some shape or form, but the tools, environment, or conditions available to those troops can “multiply” their effect on the outcome. For example, during the first Gulf War, U.S. forces had GPS available as a force multiplier, allowing armored units to navigate through unmarked desert while their adversary was confined to marked roads and navigable terrain.

The rise of the internet and Software as a Service (SaaS) platforms could be considered one of the biggest “force multipliers” to become available to software companies in the past few decades. Previously, if you wanted to sell shrink-wrapped software, you needed to do customer research, develop your software, iron out every wrinkle until it was ready to ship, then wrap it in a box and get someone to sell it for you on a shelf. Take it or leave it.

With the internet, software can be provided:

- Instantly and often

- To more users

- At a lower cost

In much the same way, we’ll examine through two case studies how the rise of cloud computing technologies has acted as a “force multiplier” for businesses in the software industry, allowing small teams to build large, highly scalable applications with less complexity.

Case study A: Robinhood

With just two DevOps engineers, the Robinhood team set out to create a full-fledged stock trading platform for day traders, delivered as a simple mobile app with a cloud infrastructure back-end. They built the platform in secret, then announced it on several online forums. By launch, they had a waiting list of over 1 million people ready to use the app.

It’s unclear whether or not the launch used Amazon’s container solutions. Either way, the scalable, standard, and easy-to-integrate solutions offered by cloud providers such as Amazon Web Services (AWS) clearly make it possible for even a small development team to have an outsized impact on a software market.

There is a risk, however, in tying your fortunes so tightly to those of a giant like Amazon. Much like how you can go to Walmart to buy both your underwear and your groceries, Amazon has worked hard to make itself the “one-stop shop” ecosystem for cloud services, going so far as to mildly clash with commercial open source vendors by providing its own products. Using the AWS “one-stop shop” can work well for a company just starting out, but in the later stages of a business, tightly coupled cloud costs can become a serious competitive disadvantage, as companies such as Lyft have noted.

Lessons from Robinhood:

- A small team can have a huge impact by using emerging tools

- It’s okay to do initial preparation in secret when no one else is operating in the market yet

- Operating costs can be high when using the “one-stop shop”, but that can be okay sometimes if it saves time or money in other areas.

Case study B: Pokemon Go

To return to the main topic of scalability enabled by software containerization, container orchestration, and commodity open source software, we’ll now take a closer look at Niantic’s 2016 launch of Pokemon Go, which ran on Google Cloud Platform (GCP).

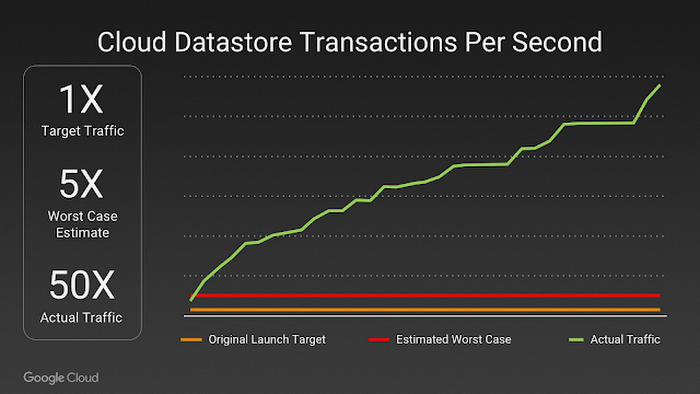

As Google director of Customer Reliability Engineering Luke Stone observes, “a picture is worth a thousand words” in this graph of activity during its launch:

With Google’s engineers working as “insurance” alongside Niantic’s during a launch that drew millions upon millions of players, Kubernetes, Google’s open source container orchestration platform, allowed the teams to scale the back-end out to enormous proportions on the fly.

In addition, Google and Niantic’s engineers upgraded the container engine orchestrating the back-end deployment and swapped out the network load balancer while the game was live, a feat they compared to replacing an airplane’s engine in flight.

When the game launched in Japan, it drew three times as many users as the U.S. launch two weeks earlier.

All this was made possible by containerizing the back-end that served the app. Network load balancers sat in front of the servers, acting as a “traffic director” that routed customers to multiple back-ends, while the container orchestration provided by Kubernetes made sure there were enough healthy back-end container instances to serve them.
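To make the “traffic director” and orchestration roles a little more concrete, here is a toy Python model. It is not how Kubernetes or Google’s load balancers are actually implemented: the class names, the round-robin routing policy, and the simple “replace unhealthy instances, then match the replica count” loop are all assumptions chosen for illustration. In real Kubernetes you would declare the desired replica count (for example, on a Deployment) and let its controllers do the reconciling.

```python
import itertools
from dataclasses import dataclass, field
from typing import List


@dataclass
class Backend:
    """A hypothetical back-end container instance."""
    name: str
    healthy: bool = True


@dataclass
class Cluster:
    """Toy orchestrator that tries to keep `desired_replicas` healthy instances."""
    desired_replicas: int
    backends: List[Backend] = field(default_factory=list)
    _ids: itertools.count = field(default_factory=itertools.count)

    def reconcile(self) -> None:
        # Remove instances that failed their health check...
        self.backends = [b for b in self.backends if b.healthy]
        # ...start replacements until the desired count is reached...
        while len(self.backends) < self.desired_replicas:
            self.backends.append(Backend(f"backend-{next(self._ids)}"))
        # ...and trim extras if the desired count was lowered.
        del self.backends[self.desired_replicas:]


class RoundRobinBalancer:
    """Toy load balancer that spreads requests across healthy back-ends."""

    def __init__(self, cluster: Cluster) -> None:
        self.cluster = cluster
        self._counter = 0

    def route(self, request_id: int) -> str:
        healthy = [b for b in self.cluster.backends if b.healthy]
        if not healthy:
            raise RuntimeError("no healthy back-ends available")
        backend = healthy[self._counter % len(healthy)]
        self._counter += 1
        return f"request {request_id} -> {backend.name}"


cluster = Cluster(desired_replicas=3)
cluster.reconcile()  # starts backend-0, backend-1, backend-2
balancer = RoundRobinBalancer(cluster)
for i in range(4):
    print(balancer.route(i))  # backend-0, backend-1, backend-2, backend-0

cluster.backends[1].healthy = False  # simulate a failed health check
cluster.desired_replicas = 5         # traffic spike: scale out
cluster.reconcile()
print([b.name for b in cluster.backends])
# ['backend-0', 'backend-2', 'backend-3', 'backend-4', 'backend-5']
```

Round-robin is only the simplest possible routing policy; the point of the sketch is the division of labor, with the balancer spreading traffic and the orchestrator keeping enough healthy instances behind it.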

Lessons from Pokemon Go:

- Plan “big”, and buy “insurance” just in case

- Set up your back-end so you can scale

- If all goes well, keep going!

Future?

Tools such as HashiCorp’s open source Terraform seek to make it possible to coordinate deployments across multiple clouds. Terraform describes infrastructure from any supported provider in one declarative “infrastructure as code” language and manages it through a single workflow, although the resources themselves remain provider-specific. This can make it easier to shift or extend deployments from one cloud to another, giving companies options and flexibility as they expand instead of tying them to a single cloud’s environment.
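Terraform configurations are written in HashiCorp’s own configuration language, not Python, and real plans also track dependencies and resources that must be replaced rather than updated. The sketch below is only a toy Python illustration of the core declarative idea behind “infrastructure as code”: you write down the infrastructure you want, and the tool compares it with what exists and works out the create, update, and destroy steps. The resource names and settings are hypothetical.

```python
from typing import Dict, List, Tuple

# Each resource is a name mapped to its settings (hypothetical examples).
Resources = Dict[str, Dict[str, str]]


def plan(desired: Resources, current: Resources) -> List[Tuple[str, str]]:
    """Compare declared (desired) infrastructure with what currently exists
    and return the actions needed to make reality match the declaration."""
    actions = []
    for name, settings in sorted(desired.items()):
        if name not in current:
            actions.append(("create", name))   # declared but missing
        elif current[name] != settings:
            actions.append(("update", name))   # exists but has drifted
    for name in sorted(current):
        if name not in desired:
            actions.append(("destroy", name))  # exists but no longer declared
    return actions


desired = {
    "web-server": {"size": "large", "region": "us-east"},
    "database": {"size": "medium", "region": "us-east"},
}
current = {
    "web-server": {"size": "small", "region": "us-east"},
    "old-cache": {"size": "small", "region": "us-east"},
}

for action, name in plan(desired, current):
    print(action, name)
# create database
# update web-server
# destroy old-cache
```

Because the desired state is plain data, the same declaration can be reviewed, versioned, and re-applied, and running the plan again after it has been applied yields no further actions.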

In addition, the ecosystem that continues to grow around containers and container orchestration is further cementing the value of these tools, and suggests that a containerized stack will likely gain even more value as complementary technologies emerge.

Conclusion:

Cloud computing is hardly in its infancy, but there are technologies, governing bodies, and software companies seeking to make it ubiquitous, standard, and cheaper. In addition, the tools that have emerged as many actors have entered this space give even small teams new opportunities to have an enormous impact on a large number of customers.
