Shucking

Installation

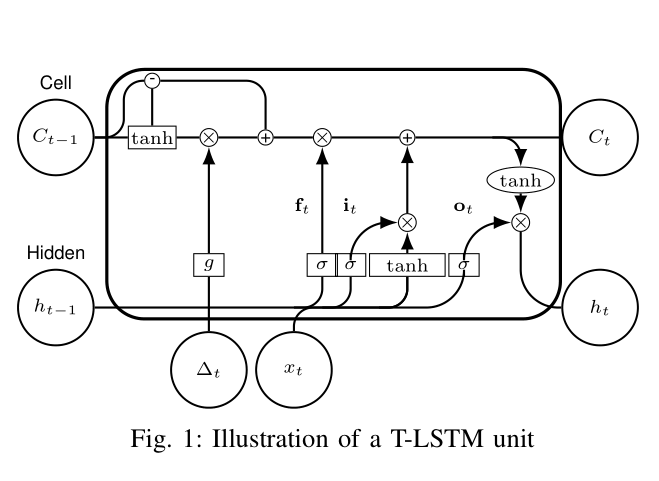

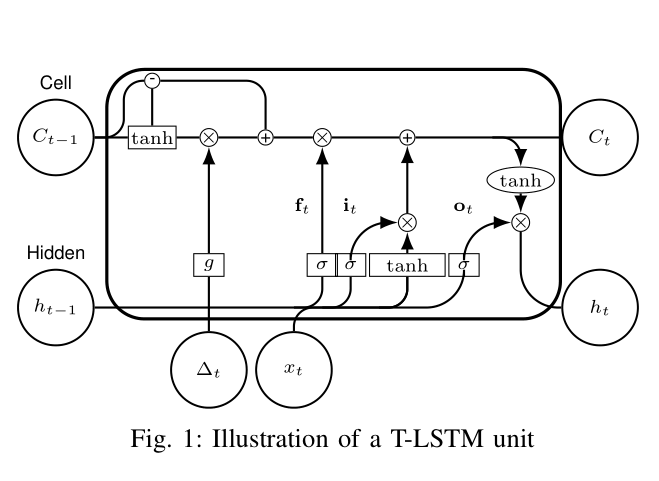

Tape covering the deprecated 3.3 V pins, so the drive doesn’t receive a “shutdown” signal

Setup

Monitoring temps during burn-in

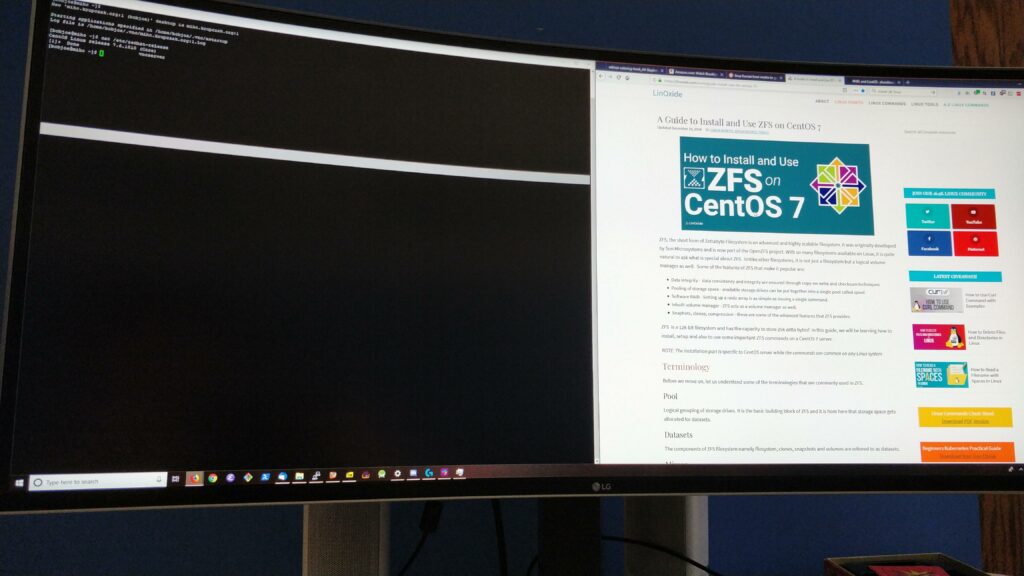

Summer break has come, and that means it’s time for personal projects! To get ready, I’ve upgraded the storage array on my home server from 4 TB (actual) to ~32 TB (raw). To cut down on cost, I’m using sub-consumer hardware: four 8 TB WD easystore backup drives, taken apart for the bare disks inside. I’m using ZFS on Linux (zfsonlinux) with CentOS to build a fault-tolerant raidz drive pool, so that if one disk fails, my data will remain intact. ZFS also provides many nice features for snapshotting, data integrity, etc., and raidz tends to perform better than typical RAID solutions. Now that it’s completed, I have about 21 TB of space to work with.
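For anyone wondering how ~32 TB raw turns into about 21 TB, here is a rough back-of-the-envelope sketch. It assumes single-parity raidz (raidz1), where one disk's worth of space goes to parity, and that the pool size is reported in binary units (TiB) while drive labels use decimal TB. The function name `raidz_usable_bytes` is just made up for illustration, and the sketch ignores ZFS metadata and padding overhead, so the real number will be a bit lower.

```python
# Rough raidz capacity estimate (illustrative only; ignores ZFS overhead).
TB = 10**12   # decimal terabyte, as printed on the drive label
TIB = 2**40   # binary tebibyte, as many tools report sizes


def raidz_usable_bytes(disks: int, disk_bytes: int, parity: int = 1) -> int:
    """Approximate usable space: every disk except the parity share holds data."""
    if disks <= parity:
        raise ValueError("need more disks than parity devices")
    return (disks - parity) * disk_bytes


raw = 4 * 8 * TB                                  # four 8 TB drives
usable = raidz_usable_bytes(4, 8 * TB, parity=1)  # assumes raidz1

print(f"raw:    {raw / TB:.0f} TB = {raw / TIB:.1f} TiB")
print(f"usable: {usable / TB:.0f} TB = {usable / TIB:.1f} TiB")
```

Under those assumptions, the usable figure comes out to 24 TB, or roughly 21.8 TiB, which lines up with the "about 21 TB" above once some filesystem overhead is taken off.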
